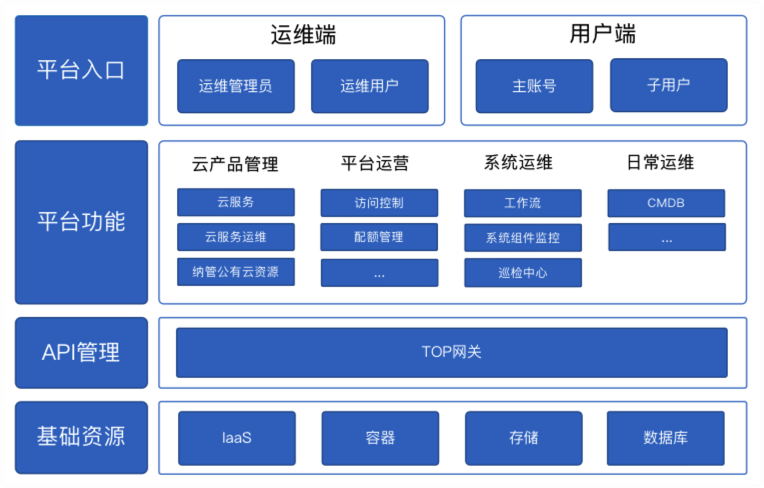

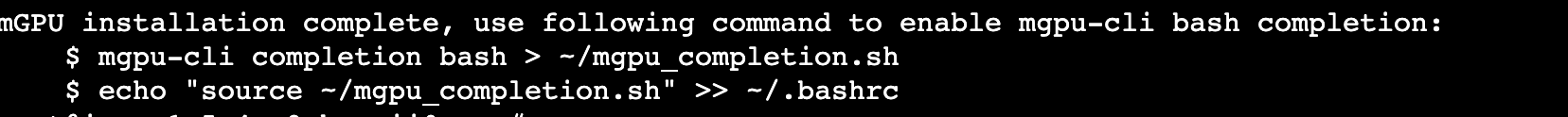

👉点击这里申请火山引擎VIP帐号,立即体验火山引擎产品>>>

背景信息

SDXL

Diffusers

软件要求

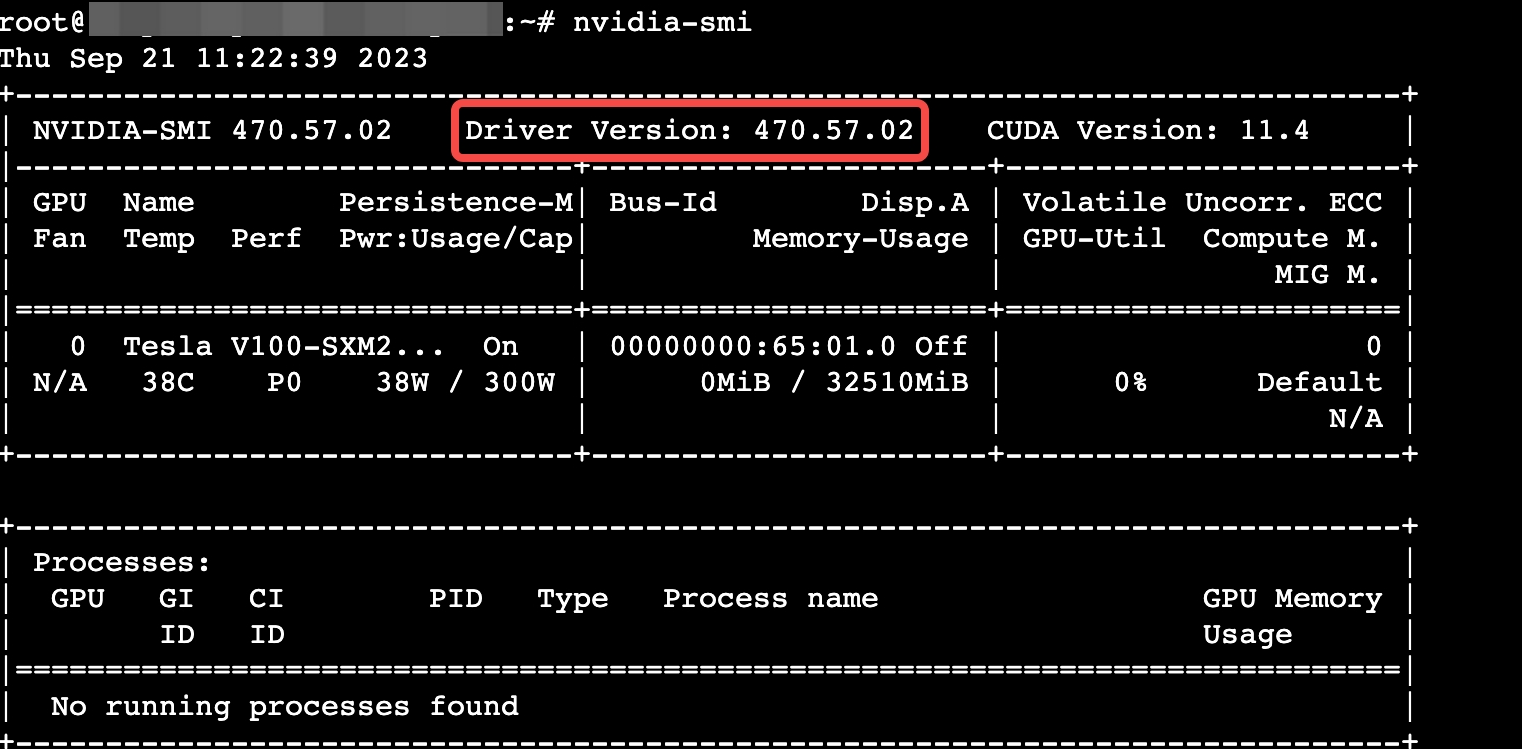

GPU驱动:用来驱动NVIDIA GPU卡的程序。本文以470.57.02为例。

Pytorch:开源的Python机器学习库,实现强大的GPU加速的同时还支持动态神经网络。本文以2.0.0为例。

Pytorch使用CUDA进行GPU加速时,在GPU驱动已经安装的情况下,依然不能使用,很可能是版本不匹配的问题,请严格关注虚拟环境中CUDA与Pytorch的版本匹配情况。

Anaconda:获取包且对包能够进行管理的工具,包含了Conda、Python在内的超过180个科学包及其依赖项,用于创建Python虚拟环境。本文以Anaconda 3和Python 3.10为例。

Gradio:快速构建机器学习Web展示页面的开源Python库。本文以3.43.2为例。

使用说明

操作步骤

步骤一:创建实例

请参考通过向导购买实例创建一台符合以下条件的实例:

基础配置:

计算规格:ecs.g1ve.2xlarge

镜像:Ubuntu 20.04,并勾选“后台自动安装GPU驱动”。

存储:云盘容量在100 GiB以上。

网络配置:勾选“分配弹性公网IP”。

创建成功后,在实例绑定的安全组中添加入方向规则:放行TCP 8000端口。具体操作请参见修改安全组访问规则。

登录实例。

执行以下命令,确认GPU驱动是否安装。

步骤二:准备虚拟环境

执行以下命令,下载Anaconda安装包。

执行以下命令,静默安装Anaconda。

在静默模式下安装Anaconda时,将使用默认设置,包括安装路径(/root/anaconda3)和环境变量设置。如果您需要自定义这些设置,请使用交互式安装程序。

安装完成后执行以下命令,初始化Anaconda。

source /root/anaconda3/bin/activateconda init

执行source ~/.bashrc命令,使配置文件生效。

创建一个名为“sd-xl”的虚拟环境,并指定该环境中的python版本为3.10。

执行conda create -n sd-xl python=3.10命令。

回显Proceed ([y]/n)?时输入“y”确认。

执行以下命令,激活虚拟环境。

执行以下命令,安装git。回显Proceed ([y]/n)?时输入“y”确认安装。

执行以下命令,安装CUDA 11.8对应的Pytorch。

本文所示“sd-xl”环境中使用的CUDA版本为11.8。您也可以自行指定CUDA版本并登录Pytorch官网,在Conda中查找与CUDA版本匹配的安装命令。

conda install pytorch==2.0.0 torchvision==0.15.0 torchaudio==2.0.0 pytorch-cuda=11.8 -c pytorch -c nvidia

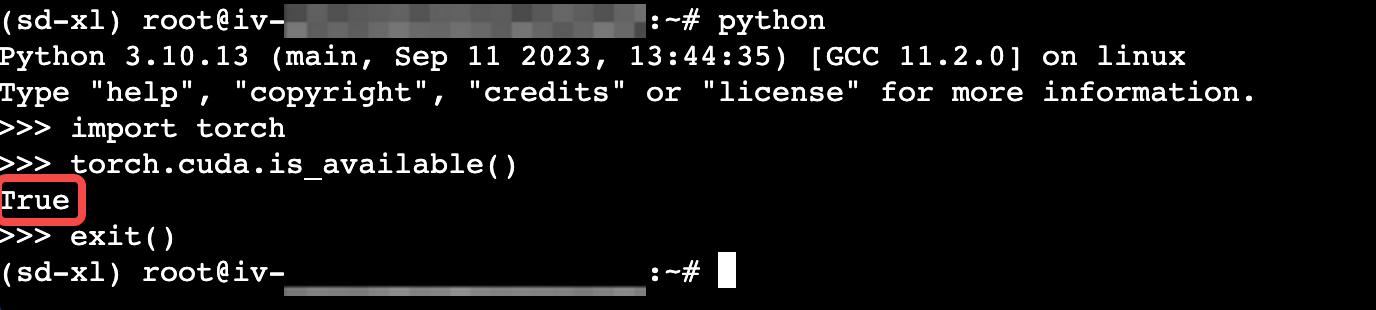

执行以下命令,检查虚拟环境是否符合预期。

python>>>import torch>>>torch.cuda.is_available()

步骤三:模型部署

依次执行以下命令,下载base模型的权重文件。

mkdir -p /root/sdcd sdapt install -y git-lfsgit lfs installgit clone https://huggingface.co/stabilityai/stable-diffusion-xl-base-1.0

依次执行以下命令,下载refiner模型的权重文件。

cd /root/sdgit lfs installgit clone https://huggingface.co/stabilityai/stable-diffusion-xl-refiner-1.0

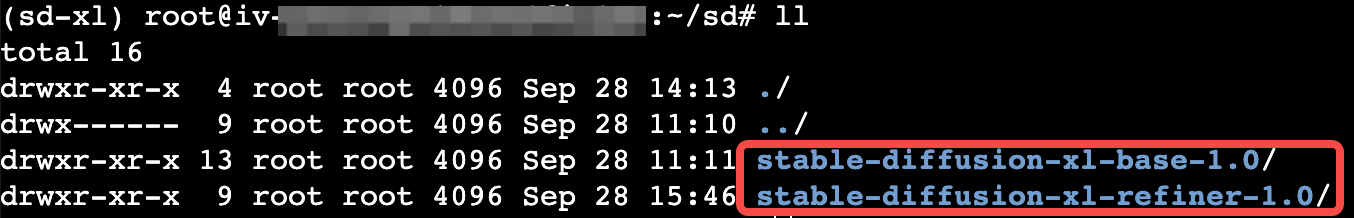

执行ll命令查看目录中包含如下文件,表示已成功下载。

步骤四:模型推理

txt2img(文生图)示例

依次执行以下命令,安装相关依赖组件。

pip install diffusers --upgradepip install transformers accelerate safetensors

编写推理脚本。

依次执行以下命令,创建cli_txt2img.py文件。

cd /root/sdvim cli_txt2img.py

添加如下内容。

from diffusers import DiffusionPipelineimport torchbase = DiffusionPipeline.from_pretrained("/root/sd/stable-diffusion-xl-base-1.0", torch_dtype=torch.float16, use_safetensors=True, variant="fp16")base.to("cuda")refiner = DiffusionPipeline.from_pretrained("/root/sd/stable-diffusion-xl-refiner-1.0", text_encoder_2=base.text_encoder_2,vae=base.vae, torch_dtype=torch.float16, use_safetensors=True, variant="fp16",)refiner.to("cuda")# Define how many steps and what % of steps to be run on each experts (80/20) heren_steps = 40high_noise_frac = 0.8prompt = "Elon Musk standing in a workroom, in the style of industrial machinery aesthetics, deutscher werkbund, uniformly staged images, soviet, light indigo and dark bronze, new american color photography, detailed facial features"negative_prompt= "(EasyNegative),(watermark), (signature), (sketch by bad-artist), (signature), (worst quality), (low quality), (bad anatomy), NSFW, nude, (normal quality)"# run both expertsimage = base(prompt=prompt,negative_prompt=negative_prompt,num_inference_steps=n_steps,denoising_end=high_noise_frac,output_type="latent",).imagesimage = refiner(prompt=prompt,negative_prompt=negative_prompt,num_inference_steps=n_steps,denoising_start=high_noise_frac,image=image,).images[0]image.save("/root/sd/test.png")按esc退出编辑模式,输入:wq并回车退出当前文件。

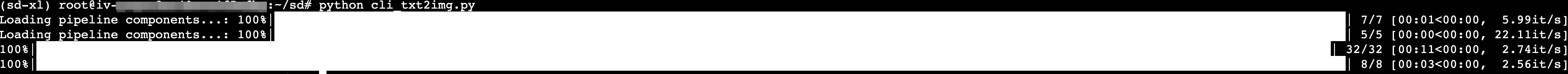

执行以下命令,运行脚本文件。

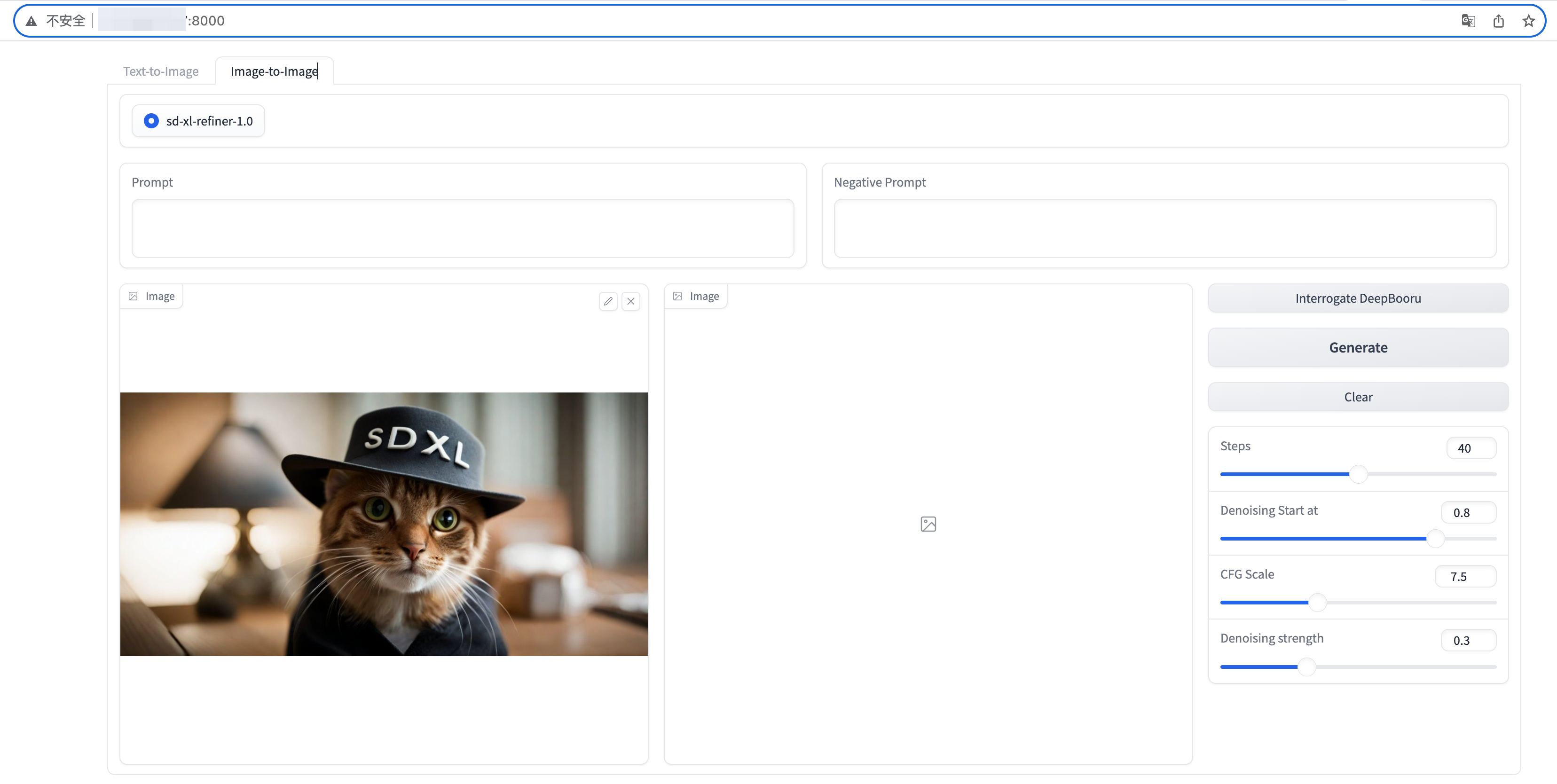

txt2img + img2img示例

依次执行以下命令,安装Gradio和相关依赖。

pip install diffusers --upgradepip install transformers accelerate safetensors gradio

依次执行以下命令,下载反向提示词模型文件及模型代码。

cd /root/sdgit clone https://github.com/AUTOMATIC1111/TorchDeepDanbooru.gitcd TorchDeepDanbooruwget https://github.com/AUTOMATIC1111/TorchDeepDanbooru/releases/download/v1/model-resnet_custom_v3.pt

编写推理脚本。

依次执行以下命令,创建web_sdxl_demo.py文件。

cd /root/sdvim web_sdxl_demo.py

添加如下内容。该脚本包含了txt2img、img2img相关功能。

import numpy as npimport gradio as grfrom diffusers import DiffusionPipeline,StableDiffusionXLImg2ImgPipelineimport torchimport tqdmfrom datetime import datetimefrom TorchDeepDanbooru import deep_danbooru_modelMODEL_BASE = "/root/sd/stable-diffusion-xl-base-1.0"MODEL_REFINER = "/root/sd/stable-diffusion-xl-refiner-1.0"print("Loading model",MODEL_BASE)base = DiffusionPipeline.from_pretrained(MODEL_BASE, torch_dtype=torch.float16, use_safetensors=True, variant="fp16")base.to("cuda")print("Loading model",MODEL_REFINER)refiner = StableDiffusionXLImg2ImgPipeline.from_pretrained(MODEL_REFINER, text_encoder_2=base.text_encoder_2,vae=base.vae, torch_dtype=torch.float16, use_safetensors=True, variant="fp16",)refiner.to("cuda")# Define how many steps and what % of steps to be run on each experts (80/20) here# base-high noise, refiner-low noise# the base model steps = default_n_steps*default_high_noise_fracdefault_n_steps = 40default_high_noise_frac = 0.8default_num_images =2def predit_txt2img(prompt,negative_prompt,model_selected,num_images,n_steps, high_noise_frac,cfg_scale):# run both expertsstart = datetime.now()num_images=int(num_images)n_steps=int(n_steps)prompt, negative_prompt = [prompt] * num_images, [negative_prompt] * num_imagesimages_list = []model_selected = model_selectedhigh_noise_frac=float(high_noise_frac)cfg_scale=float(cfg_scale)g = torch.Generator(device="cuda")if model_selected == "sd-xl-base-1.0" or model_selected == "sd-xl-base-refiner-1.0":images = base(prompt=prompt,negative_prompt=negative_prompt,num_inference_steps=n_steps,denoising_end=high_noise_frac,guidance_scale=cfg_scale,output_type="latent" if model_selected == "sd-xl-base-refiner-1.0" else "pil",generator=g).imagesif model_selected == "sd-xl-base-refiner-1.0":images = refiner(prompt=prompt,negative_prompt=negative_prompt,num_inference_steps=n_steps,denoising_start=high_noise_frac,guidance_scale=cfg_scale,image=images,).imagesfor image in images:images_list.append(image)torch.cuda.empty_cache()cost_time=(datetime.now()-start).secondsprint(f"cost time={cost_time},{datetime.now()}")return images_listdef predit_img2img(prompt, negative_prompt,init_image, model_selected,n_steps, high_noise_frac,cfg_scale,strength):start = datetime.now()prompt = promptnegative_prompt =negative_promptmodel_selected = model_selectedinit_image = init_imagen_steps=int(n_steps)high_noise_frac=float(high_noise_frac)cfg_scale=float(cfg_scale)strength=float(strength)if model_selected == "sd-xl-refiner-1.0":images = refiner(prompt=prompt,negative_prompt=negative_prompt,num_inference_steps=n_steps,denoising_start=high_noise_frac,guidance_scale=cfg_scale,strength = strength,image=init_image,# target_size = (1024, 1024)).imagestorch.cuda.empty_cache()cost_time=(datetime.now()-start).secondsprint(f"cost time={cost_time},{datetime.now()}")return images[0]def interrogate_deepbooru(pil_image, threshold):threshold =0.5model = deep_danbooru_model.DeepDanbooruModel()model.load_state_dict(torch.load('/root/ai/sd/TorchDeepDanbooru/model-resnet_custom_v3.pt'))model.eval().half().cuda()pic = pil_image.convert("RGB").resize((512, 512))a = np.expand_dims(np.array(pic, dtype=np.float32), 0) / 255with torch.no_grad(), torch.autocast("cuda"):x = torch.from_numpy(a).cuda()# first runy = model(x)[0].detach().cpu().numpy()# measure performancefor n in tqdm.tqdm(range(10)):model(x)result_tags_out = []for i, p in enumerate(y):if p >= threshold:result_tags_out.append(model.tags[i])print(model.tags[i], p)prompt = ', '.join(result_tags_out).replace('_', ' ').replace(':', ' ')print(f"prompt={prompt}")return promptdef clear_txt2img(prompt, negative_prompt):prompt = ""negative_prompt = ""return prompt, negative_promptdef clear_img2img(prompt, negative_prompt, image_input,image_output):prompt = ""negative_prompt = ""image_input = Noneimage_output = Nonereturn prompt, negative_prompt,image_input,image_outputwith gr.Blocks(title="Stable Diffusion",theme=gr.themes.Default(primary_hue=gr.themes.colors.blue))as demo:with gr.Tab("Text-to-Image"):# gr.Markdown("Stable Diffusion XL Base + Refiner.")model_selected = gr.Radio(["sd-xl-base-refiner-1.0","sd-xl-base-1.0"],show_label=False, value="sd-xl-base-refiner-1.0")with gr.Row():with gr.Column(scale=4):prompt = gr.Textbox(label= "Prompt",lines=3)negative_prompt = gr.Textbox(label= "Negative Prompt",lines=1)with gr.Row():with gr.Column():n_steps=gr.Slider(20, 60, value=default_n_steps, label="Steps", info="Choose between 20 and 60")high_noise_frac=gr.Slider(0, 1, value=0.8, label="Denoising Start at")with gr.Column():num_images=gr.Slider(1, 3, value=default_num_images, label="Gernerated Images", info="Choose between 1 and 3") #num images=4,A10报显存溢出cfg_scale=gr.Slider(1, 20, value=7.5, label="CFG Scale")with gr.Column(scale=1):with gr.Row():txt2img_button = gr.Button("Generate",size="sm")clear_button = gr.Button("Clear",size="sm")gallery = gr.Gallery(label="Generated images", show_label=False, elem_id="gallery",columns=int(num_images.value), height=800,object_fit='fill')txt2img_button.click(predit_txt2img, inputs=[prompt, negative_prompt, model_selected,num_images,n_steps, high_noise_frac,cfg_scale], outputs=[gallery])clear_button.click(clear_txt2img, inputs=[prompt, negative_prompt], outputs=[prompt, negative_prompt])with gr.Tab("Image-to-Image"):model_selected = gr.Radio(["sd-xl-refiner-1.0"],value="sd-xl-refiner-1.0",show_label=False)with gr.Row():with gr.Column(scale=1):prompt = gr.Textbox(label= "Prompt",lines=2)with gr.Column(scale=1):negative_prompt = gr.Textbox(label= "Negative Prompt",lines=2)with gr.Row():with gr.Column(scale=3):image_input = gr.Image(type="pil",height=512)with gr.Column(scale=3):image_output = gr.Image(height=512)with gr.Column(scale=1):img2img_deepbooru = gr.Button("Interrogate DeepBooru",size="sm")# img2img_clip = gr.Button("Interrogate CLIP",size="sm")img2img_button = gr.Button("Generate",size="lg")clear_button = gr.Button("Clear",size="sm")n_steps=gr.Slider(20, 60, value=40, step=10,label="Steps")high_noise_frac=gr.Slider(0, 1, value=0.8, step=0.1,label="Denoising Start at")cfg_scale=gr.Slider(1, 20, value=7.5, step=0.1,label="CFG Scale")strength=gr.Slider(0, 1, value=0.3,step=0.1,label="Denoising strength")img2img_deepbooru.click(fn=interrogate_deepbooru, inputs=image_input,outputs=[prompt])img2img_button.click(predit_img2img, inputs=[prompt, negative_prompt, image_input, model_selected, n_steps, high_noise_frac,cfg_scale,strength], outputs=image_output)clear_button.click(clear_img2img, inputs=[prompt, negative_prompt, image_input], outputs=[prompt, negative_prompt, image_input,image_output])if __name__ == "__main__":demo.launch(server_name="0.0.0.0", server_port=8000)按esc退出编辑模式,输入:wq并回车退出当前文件。

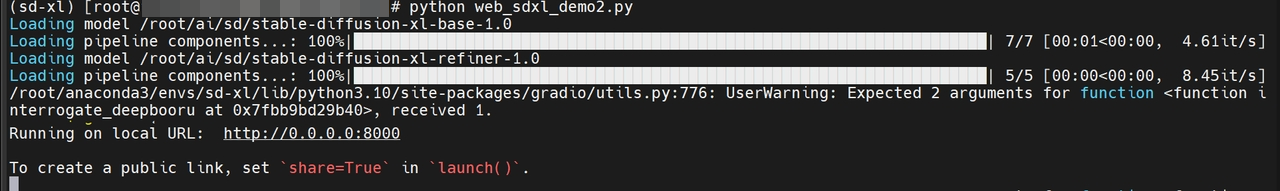

执行以下命令,运行脚本文件。

浏览器访问http://<公网IP>:8000/,可以在页面上调节相关参数,生成不同图片。